GitHub - Deepseek-ai/DeepSeek-V3


Posted by Marylin Yang, 25-02-14 21:01

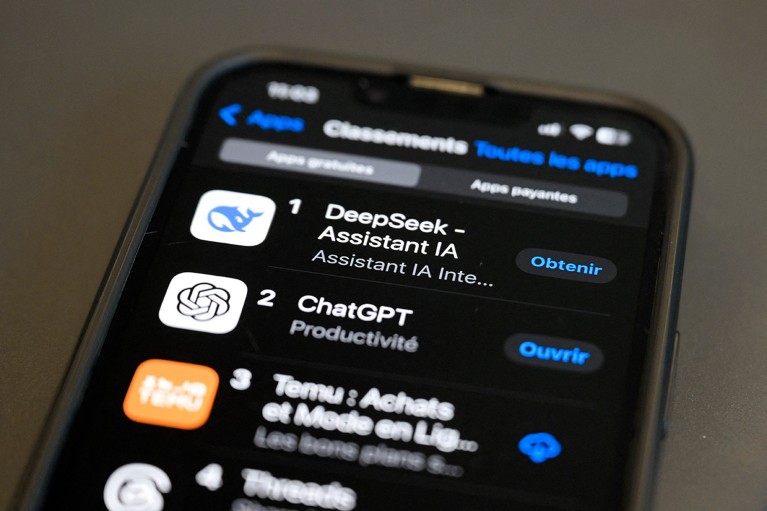

On Jan. 27, 2025, DeepSeek reported large-scale malicious attacks on its services, forcing the company to temporarily limit new user registrations. The issue extended into Jan. 28, when the company reported it had identified the problem and deployed a fix. On Monday, Jan. 27, 2025, the Nasdaq Composite dropped by 3.4% at market opening, with Nvidia declining by 17% and losing roughly $600 billion in market capitalization. The company was founded by Liang Wenfeng, a graduate of Zhejiang University, in May 2023. Liang also co-founded High-Flyer, a China-based quantitative hedge fund that owns DeepSeek. In a July 2024 interview with the Chinese media outlet 36Kr, Liang said that, on top of chip sanctions, Chinese companies face the additional problem that their AI engineering techniques tend to be less efficient. 36Kr estimates that the company has more than 10,000 GPUs in stock, but Dylan Patel, founder of the AI research consultancy SemiAnalysis, estimates that it has at least 50,000. Recognizing the potential of this stockpile for AI training is what led Liang to establish DeepSeek, which was able to use these chips in combination with lower-power chips to develop its models.


Because all user data is stored in China, the biggest concern is the potential for a data leak to the Chinese government. The LLM was also trained with a Chinese worldview, a potential drawback given the country's authoritarian government. DeepSeek LLM 7B/67B models, including base and chat versions, are released to the public on GitHub, Hugging Face and AWS S3. If you have played with LLM outputs, you know it can be difficult to validate structured responses. Emergent behavior network. DeepSeek's emergent behavior innovation is the discovery that complex reasoning patterns can develop naturally through reinforcement learning without being explicitly programmed. However, it wasn't until January 2025, after the release of its R1 reasoning model, that the company became globally famous. DeepSeek-R1. Released in January 2025, this model is based on DeepSeek-V3 and focuses on advanced reasoning tasks, competing directly with OpenAI's o1 model in performance while maintaining a significantly lower cost structure. Janus-Pro-7B. Released in January 2025, Janus-Pro-7B is a vision model that can understand and generate images. A third, optional prompt focusing on the unsafe topic can further amplify the harmful output. DeepSeek-V2. Released in May 2024, this is the second version of the company's LLM, focusing on strong performance and lower training costs.
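The difficulty of validating structured responses can be made concrete. Below is a minimal sketch, assuming the model was asked to reply with a single JSON object: it parses the reply with Python's standard `json` module and checks it against a small hand-written table of required fields and types. The schema (`model`, `release_year`, `open_source`) and the function name `validate_response` are hypothetical, chosen only for illustration.

```python
import json
from typing import Any, Dict, List, Optional, Tuple

# Hypothetical schema for illustration: field name -> required Python type.
EXPECTED_FIELDS: Dict[str, type] = {"model": str, "release_year": int, "open_source": bool}


def validate_response(raw: str) -> Tuple[Optional[Dict[str, Any]], List[str]]:
    """Parse an LLM reply as JSON and check it against EXPECTED_FIELDS.

    Returns (parsed_object, errors). parsed_object is None when the reply is
    not valid JSON or is not a JSON object; errors is empty when all checks pass.
    """
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        return None, [f"invalid JSON: {exc.msg} at position {exc.pos}"]
    if not isinstance(data, dict):
        return None, ["top-level value must be a JSON object"]

    errors: List[str] = []
    for field, expected_type in EXPECTED_FIELDS.items():
        if field not in data:
            errors.append(f"missing field: {field}")
            continue
        value = data[field]
        # bool is a subclass of int in Python, so True would otherwise pass as an int.
        if expected_type is int and isinstance(value, bool):
            errors.append(f"field {field} should be int, got bool")
        elif not isinstance(value, expected_type):
            errors.append(
                f"field {field} should be {expected_type.__name__}, got {type(value).__name__}"
            )
    return data, errors


if __name__ == "__main__":
    reply = '{"model": "DeepSeek-R1", "release_year": "2025"}'
    print(validate_response(reply)[1])
    # → ['field release_year should be int, got str', 'missing field: open_source']
```

Collecting every error instead of stopping at the first one makes it easy to send the full list back to the model in a retry prompt.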


GPT-4o: This is the latest version of the well-known GPT language family. DeepSeek represents the latest challenge to OpenAI, which established itself as an industry leader with the debut of ChatGPT in 2022. OpenAI has helped push the generative AI industry forward with its GPT family of models, as well as its o1 class of reasoning models. DeepSeek is the latest example showing the power of open source. DeepSeek, a Chinese AI company, is disrupting the industry with its low-cost, open source large language models, challenging U.S. DeepSeek focuses on developing open source LLMs. Researchers at Tsinghua University have simulated a hospital, filled it with LLM-powered agents pretending to be patients and medical staff, then shown that such a simulation can be used to improve the real-world performance of LLMs on medical test exams… If you intend to build a multi-agent system, Camel may be one of the best choices available in the open-source scene.

"The technology race with the Chinese Communist Party (CCP) is not one the United States can afford to lose," LaHood said in a statement. In manufacturing, DeepSeek-powered robots can perform complex assembly tasks, while in logistics, automated systems can optimize warehouse operations and streamline supply chains. DeepSeek-Coder-V2. Released in July 2024, this is a 236 billion-parameter model offering a context window of 128,000 tokens, designed for complex coding challenges. 0.3 for the first 10T tokens, and to 0.1 for the remaining 4.8T tokens. The bill was first reported by The Wall Street Journal, which said DeepSeek did not respond to a request for comment. We first introduce the basic architecture of DeepSeek-V3, featuring Multi-head Latent Attention (MLA) (DeepSeek-AI, 2024c) for efficient inference and DeepSeekMoE (Dai et al., 2024) for economical training. The field is constantly coming up with ideas, large and small, that make things more effective or efficient: it could be an improvement to the model's architecture (a tweak to the basic Transformer architecture that all of today's models use) or simply a way of running the model more efficiently on the underlying hardware.
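The token figures in that paragraph describe a two-stage step schedule: a value held at 0.3 for the first 10T training tokens, then dropped to 0.1 for the remaining 4.8T, for 14.8T tokens in total. The text here does not say which training coefficient follows this schedule, so the constant and function names below (`stage_coefficient` and friends) are hypothetical; the sketch only encodes the stated values and the stage boundary.

```python
# Stage lengths as stated in the text: 10T tokens, then the remaining 4.8T.
FIRST_STAGE_TOKENS = 10_000_000_000_000   # 10T
SECOND_STAGE_TOKENS = 4_800_000_000_000   # 4.8T
TOTAL_TOKENS = FIRST_STAGE_TOKENS + SECOND_STAGE_TOKENS  # 14.8T


def stage_coefficient(tokens_seen: int) -> float:
    """Hypothetical step schedule: 0.3 during the first 10T tokens, 0.1 afterwards."""
    if tokens_seen < 0 or tokens_seen > TOTAL_TOKENS:
        raise ValueError("tokens_seen is outside the 14.8T-token training run")
    return 0.3 if tokens_seen < FIRST_STAGE_TOKENS else 0.1


if __name__ == "__main__":
    for seen in (0, FIRST_STAGE_TOKENS - 1, FIRST_STAGE_TOKENS, TOTAL_TOKENS):
        print(seen, stage_coefficient(seen))
```

A hard step, rather than a gradual decay, matches the wording "0.3 for the first … and to 0.1 for the remaining", which names only two constant values.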

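The paragraph above mentions DeepSeekMoE for economical training. As a rough, hedged illustration of the mixture-of-experts idea, loosely modeled on the shared-plus-routed expert split described for DeepSeekMoE, the pure-Python sketch below adds a token's residual input, the outputs of always-active shared experts, and the gated outputs of only the `top_k` routed experts with the highest router affinity. It is not DeepSeek's implementation: the names (`moe_layer`, `router_weights`, `top_k`) are hypothetical, experts are plain callables, the scoring choice (sigmoid affinities renormalized over the selected experts) is an assumption for this sketch, and load balancing, batching and training are omitted.

```python
import math
from typing import Callable, List, Sequence

Vector = List[float]
Expert = Callable[[Vector], Vector]  # maps a hidden vector to a vector of the same size


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def moe_layer(
    x: Vector,
    shared_experts: Sequence[Expert],
    routed_experts: Sequence[Expert],
    router_weights: Sequence[Vector],
    top_k: int,
) -> Vector:
    """Hypothetical MoE layer: always-on shared experts plus top-k routed experts."""
    if len(router_weights) != len(routed_experts):
        raise ValueError("need one router vector per routed expert")
    if not 0 < top_k <= len(routed_experts):
        raise ValueError("top_k must be between 1 and the number of routed experts")

    # Affinity of the token to each routed expert: sigmoid of a dot product (assumed scoring).
    scores = [sigmoid(sum(a * b for a, b in zip(x, w))) for w in router_weights]
    # Keep only the top_k highest-scoring routed experts; the rest are never evaluated.
    chosen = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:top_k]
    # Renormalize the selected scores so the gates sum to 1.
    total = sum(scores[i] for i in chosen)

    out = list(x)  # residual path: the input is carried through unchanged
    for expert in shared_experts:  # shared experts process every token, ungated
        out = [o + y for o, y in zip(out, expert(x))]
    for i in chosen:  # routed experts contribute in proportion to their gate
        gate = scores[i] / total
        out = [o + gate * y for o, y in zip(out, routed_experts[i](x))]
    return out


if __name__ == "__main__":
    def scale(c: float) -> Expert:  # toy "expert" that multiplies its input by c
        return lambda v: [c * t for t in v]

    print(moe_layer([1.0, 0.0], [scale(1.0)], [scale(10.0), scale(100.0), scale(1000.0)],
                    [[2.0, 0.0], [0.0, 0.0], [-2.0, 0.0]], top_k=1))
    # → [12.0, 0.0]
```

The saving comes from `chosen`: only `top_k` of the routed experts run for a given token, so compute per token grows with `top_k` rather than with the total number of experts.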
