Try These 5 Things When You First Start DeepSeek (Because of Science)


Posted by Sadie on 25-02-01 12:55

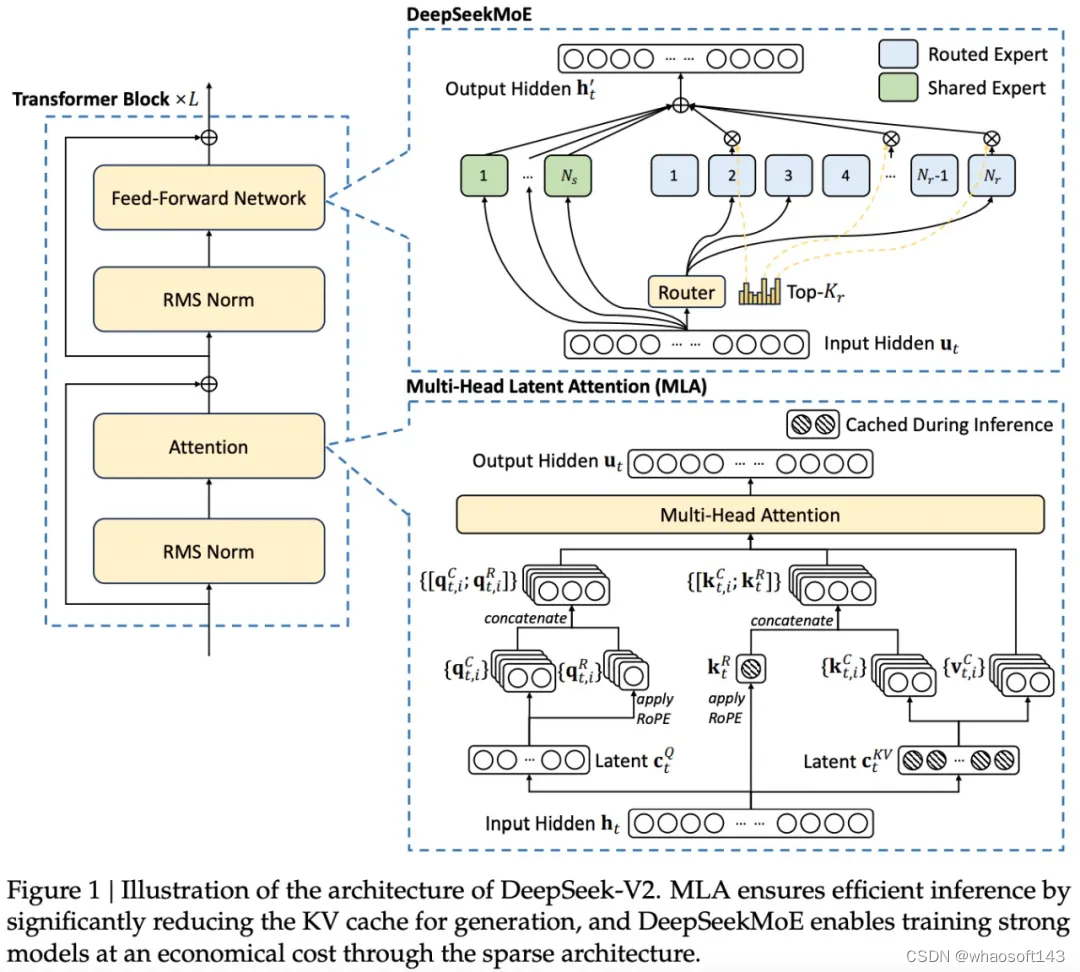

DeepSeek claimed that training the model took 2,788 thousand H800 GPU hours, which, at a cost of $2/GPU hour, comes out to a mere $5.576 million. What makes DeepSeek so special is the company's claim that it was built at a fraction of the cost of industry-leading models like those from OpenAI, because it uses fewer advanced chips. A world where Microsoft gets to provide inference to its customers for a fraction of the cost means that Microsoft has to spend less on data centers and GPUs, or, just as likely, sees dramatically higher usage given that inference is so much cheaper.

Context windows are particularly expensive in terms of memory, as every token requires both a key and a corresponding value; DeepSeekMLA, or multi-head latent attention, makes it possible to compress the key-value store, dramatically reducing memory usage during inference.

H800s, however, are Hopper GPUs; they just have much more constrained memory bandwidth than H100s because of U.S. export controls. In an interview with CNBC last week, Alexandr Wang, CEO of Scale AI, cast doubt on DeepSeek's account, saying it was his "understanding" that it had access to 50,000 more advanced H100 chips that it could not talk about due to US export controls.
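The training-cost figure quoted above is simple arithmetic, and it can be checked in a few lines. The sketch below uses only the two numbers stated in the text (GPU hours and the assumed rental price); the variable names are illustrative.

```python
# Figures quoted in the text above.
gpu_hours_thousands = 2_788     # "2,788 thousand H800 GPU hours"
price_per_gpu_hour_usd = 2      # "$2/GPU hour" (the assumed rental rate)

total_gpu_hours = gpu_hours_thousands * 1_000
total_cost_usd = total_gpu_hours * price_per_gpu_hour_usd

print(f"{total_gpu_hours:,} GPU hours -> ${total_cost_usd / 1e6:.3f} million")
```

Note that this covers only GPU time at the stated rate; the text gives no figures for anything else, so nothing else is modeled here.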

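To see why the key-value store dominates memory for long context windows, and why compressing it helps, here is a rough back-of-the-envelope sketch. Every dimension below (layer count, head count, head size, latent size) is a hypothetical value chosen for illustration, not a DeepSeek figure, and the "latent" cache is a simplification of multi-head latent attention: it assumes one compressed vector per token per layer is stored in place of the full keys and values.

```python
def kv_cache_bytes(tokens, layers, heads, head_dim, bytes_per_value=2):
    """Standard attention: one key and one value vector per head, per layer, per token."""
    return tokens * layers * heads * head_dim * 2 * bytes_per_value


def latent_cache_bytes(tokens, layers, latent_dim, bytes_per_value=2):
    """Simplified latent cache: one compressed vector per layer, per token."""
    return tokens * layers * latent_dim * bytes_per_value


# Hypothetical model dimensions (assumptions, not taken from the article).
tokens, layers, heads, head_dim, latent_dim = 32_768, 32, 32, 128, 512

full = kv_cache_bytes(tokens, layers, heads, head_dim)
latent = latent_cache_bytes(tokens, layers, latent_dim)

print(f"full KV cache:  {full / 2**30:.1f} GiB")
print(f"latent cache:   {latent / 2**30:.1f} GiB")
print(f"reduction:      {full / latent:.0f}x")
```

The reduction factor depends entirely on the assumed ratio between the full key/value width and the latent width, so the numbers printed here say nothing about any real model.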

The final team is responsible for restructuring Llama, presumably to copy DeepSeek's functionality and success.

Some models, like GPT-3.5, activate the entire model during both training and inference; it turns out, however, that not every part of the model is necessary for the topic at hand. MoE (mixture of experts) splits the model into multiple "experts" and only activates the ones that are necessary; GPT-4 was an MoE model that was believed to have 16 experts with approximately 110 billion parameters each. Critically, DeepSeekMoE also introduced new approaches to load balancing and routing during training; traditionally MoE increased communications overhead in training in exchange for efficient inference, but DeepSeek's approach made training more efficient as well.

The key implications of these breakthroughs, and the part you need to know, only became apparent with V3, which added a new approach to load balancing (further reducing communications overhead) and multi-token prediction in training (further densifying each training step, again reducing overhead): V3 was shockingly cheap to train. Moreover, if you actually did the math on the previous question, you would realize that DeepSeek actually had an excess of computing; that's because DeepSeek programmed 20 of the 132 processing units on each H800 specifically to manage cross-chip communications.

Distillation is how you get models like GPT-4 Turbo from GPT-4.
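The "only activate the experts that are necessary" idea can be illustrated with a toy top-k router. This is a minimal sketch of generic mixture-of-experts routing, not DeepSeekMoE itself: the experts are plain scalar functions, the router scores are hard-coded rather than learned, and load balancing is not modeled. All names (`route_top_k`, `moe_forward`) are hypothetical.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route_top_k(router_scores, k):
    """Return (expert_index, weight) pairs for the k highest-scoring experts."""
    probs = softmax(router_scores)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)          # renormalize over the chosen experts
    return [(i, probs[i] / norm) for i in top]


def moe_forward(x, experts, router_scores, k=2):
    """Weighted sum of the outputs of only the selected experts."""
    return sum(w * experts[i](x) for i, w in route_top_k(router_scores, k))


# Toy experts: each one just scales its input (stand-ins for real sub-networks).
experts = [lambda x, s=s: s * x for s in (1.0, 2.0, 3.0, 4.0)]
router_scores = [0.1, 2.0, -1.0, 1.5]          # would come from a learned router

selected = route_top_k(router_scores, k=2)
output = moe_forward(10.0, experts, router_scores, k=2)
print("selected experts:", [i for i, _ in selected])
print("output:", output)
```

Only two of the four toy experts run for this input, which is the source of the inference savings described above; the cost is that tokens must be dispatched to wherever their experts live, which is the communications overhead the text mentions.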

Try multi-agent setups. Having another LLM that can correct the first one's mistakes, or enter into a dialogue where two minds reach a better outcome, is entirely possible.

"DeepSeekMoE has two key ideas: segmenting experts into finer granularity for [...]"

[...] constrained to the H800; if DeepSeek had access to H100s, they probably would have used a larger training cluster with far fewer optimizations specifically focused on overcoming the lack of bandwidth.

Distillation is easier for a company to do on its own models, because they have full access, but you can still do distillation in a somewhat more unwieldy way via API, or even, if you get creative, via chat clients. Distillation seems terrible for leading-edge models. Distillation obviously violates the terms of service of various models, but the only way to stop it is to actually cut off access, via IP banning, rate limiting, etc. It's assumed to be widespread in terms of model training, and is why there are an ever-increasing number of models converging on GPT-4o quality.
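For readers unfamiliar with the mechanics, the sketch below shows one common textbook formulation of distillation: the student is trained to match the teacher's temperature-softened output distribution by minimizing a KL divergence. This is an assumption about the general technique, not a description of what any lab does. In particular, it presumes access to the teacher's logits, which corresponds to the "full access" case above; distillation through an API or chat client would typically see only generated text and train on that instead.

```python
import math


def softmax(logits, temperature=1.0):
    """Softmax with temperature; higher temperature flattens the distribution."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL(teacher || student) over temperature-softened distributions."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q))


# Toy logits over a three-token vocabulary (made-up numbers).
teacher = [2.0, 1.0, 0.1]
far_student = [0.1, 1.0, 2.0]

print("loss, mismatched student:", distillation_loss(teacher, far_student))
print("loss, identical student: ", distillation_loss(teacher, teacher))
```

The loss is zero when the student reproduces the teacher's distribution exactly and grows as the two diverge, which is what a training loop would push down.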
