Why DeepSeek Is No Friend to Small Business

Hamish, posted 25-02-01 12:21

Yes, DeepSeek has encountered challenges, including a reported cyberattack that led the company to temporarily restrict new user registrations. This focus allows the company to concentrate on advancing foundational AI technologies without immediate commercial pressures. The DeepSeek-V2 series (including Base and Chat) supports commercial use. Evaluation results show that, even with only 21B activated parameters, DeepSeek-V2 and its chat versions still achieve top-tier performance among open-source models. Since launch, we've also gotten confirmation of the ChatBotArena ranking that places them in the top 10, above the likes of recent Gemini Pro models, Grok 2, o1-mini, and others. With only 37B active parameters, this is extremely appealing for many enterprise applications. DeepSeek-V2 comprises 236B total parameters, of which 21B are activated for each token, and supports a context length of 128K tokens. What are DeepSeek's future plans? Nvidia's stock bounced back by nearly 9% on Tuesday, signaling renewed confidence in the company's future. Therefore, we recommend that future chips support fine-grained quantization by enabling Tensor Cores to receive scaling factors and implement MMA with group scaling. By leveraging a vast amount of math-related web data and introducing a novel optimization technique called Group Relative Policy Optimization (GRPO), the researchers have achieved impressive results on the challenging MATH benchmark.
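
The gap between 236B total and 21B activated parameters comes from a Mixture-of-Experts design: a router picks a few experts for each token, so most expert weights sit idle for any given token. The sketch below is a generic top-k router in NumPy, not DeepSeekMoE itself (which additionally uses shared experts and finer-grained expert segmentation); the toy sizes, `k=2`, and the function name `moe_forward` are hypothetical. The real 21B figure also counts attention and embedding weights, so the toy ratio is only illustrative.

```python
import numpy as np

def moe_forward(x, gate_w, experts, k=2):
    """Generic top-k MoE layer for a single token (not DeepSeekMoE's exact scheme).

    x:       (d,) hidden state of one token
    gate_w:  (num_experts, d) router weights
    experts: list of (d, d) weight matrices, one per expert
    """
    scores = gate_w @ x                          # one router score per expert
    top = np.argsort(scores)[-k:]                # indices of the k highest-scoring experts
    weights = np.exp(scores[top] - scores[top].max())
    weights /= weights.sum()                     # softmax over the selected experts only
    out = np.zeros_like(x)
    for w, idx in zip(weights, top):
        out += w * (experts[idx] @ x)            # only k of the experts do any compute
    return out, top

rng = np.random.default_rng(0)
d, num_experts = 8, 16                           # hypothetical toy sizes
x = rng.standard_normal(d)
gate_w = rng.standard_normal((num_experts, d))
experts = [rng.standard_normal((d, d)) for _ in range(num_experts)]

out, used = moe_forward(x, gate_w, experts, k=2)
print(len(used) / num_experts)   # 0.125: share of experts touched by this token
print(round(21 / 236, 3))        # 0.089: DeepSeek-V2's activated/total parameter ratio
```

Because each token only runs through k experts, per-token compute scales with k rather than with the total number of experts, which is why total and activated parameter counts can differ so much.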

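The chip recommendation above refers to fine-grained quantization, where each small group of values carries its own scaling factor and the matrix multiply-accumulate (MMA) applies those scales to partial sums. As a rough sketch, the code below quantizes vectors to int8 with one absmax scale per group and computes a dot product by summing integer products per group before rescaling. Int8 stands in for FP8 (which NumPy does not provide), and the group size of 128 and all function names are assumptions for illustration, not DeepSeek's kernels.

```python
import numpy as np

GROUP_SIZE = 128  # assumed group size for this sketch

def quantize_groups(x, group_size=GROUP_SIZE):
    """Quantize a 1-D float tensor to int8 with one absmax scale per group."""
    assert x.size % group_size == 0, "length must be a multiple of the group size"
    groups = x.reshape(-1, group_size)
    scales = np.abs(groups).max(axis=1, keepdims=True) / 127.0
    scales[scales == 0] = 1.0                     # avoid dividing by zero for all-zero groups
    q = np.clip(np.round(groups / scales), -127, 127).astype(np.int8)
    return q, scales

def dequantize_groups(q, scales):
    """Map int8 groups back to floats using each group's own scale."""
    return (q.astype(np.float32) * scales).reshape(-1)

def group_scaled_dot(qa, sa, qb, sb):
    """Dot product with group scaling: integer partial sums per group, then rescale.

    Mimics the idea of MMA with group scaling; real hardware does this inside
    the Tensor Core pipeline rather than in NumPy.
    """
    partial = (qa.astype(np.int32) * qb.astype(np.int32)).sum(axis=1)  # one int sum per group
    return float((partial * sa[:, 0] * sb[:, 0]).sum())

# Usage: the quantized dot product is close to, but not identical to, the float one
rng = np.random.default_rng(0)
a = rng.standard_normal(256).astype(np.float32)
b = rng.standard_normal(256).astype(np.float32)
qa, sa = quantize_groups(a)
qb, sb = quantize_groups(b)
print(float(a @ b), group_scaled_dot(qa, sa, qb, sb))
```

Keeping a scale per group means one outlier value only costs precision within its own group instead of across the whole tensor.
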

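GRPO's central idea, as described for DeepSeekMath, is to drop the separate value (critic) model and instead score each sampled answer relative to the other answers drawn for the same prompt. Below is a minimal sketch of the outcome-reward advantage computation, assuming binary correctness rewards; the function name is hypothetical, and using the population standard deviation with an `eps` guard is an implementation choice. The clipped policy-gradient update and KL penalty that consume these advantages are omitted.

```python
import statistics

def group_relative_advantages(rewards, eps=1e-8):
    """GRPO-style outcome advantages: score each sample against its own group.

    The group mean acts as the baseline, so no learned value (critic) model is needed.
    Population std and the eps guard are implementation choices for this sketch.
    """
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)
    return [(r - mean) / (std + eps) for r in rewards]

# Hypothetical group of 4 sampled answers to one MATH problem: 1.0 = correct, 0.0 = wrong
rewards = [1.0, 0.0, 0.0, 1.0]
advantages = group_relative_advantages(rewards)
print(advantages)   # correct answers get positive advantages, wrong ones negative
```

If every answer in a group gets the same reward, all advantages are zero, so that prompt contributes no learning signal for that step.
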
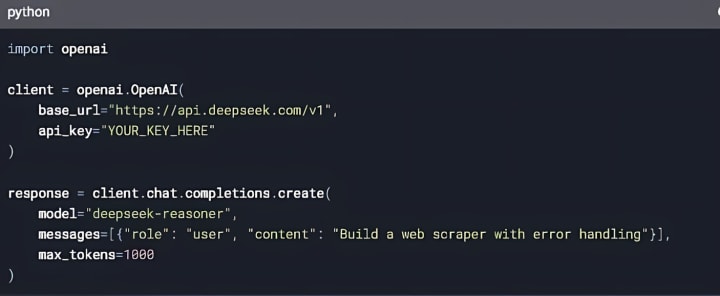

These APIs allow software developers to integrate OpenAI's sophisticated AI models into their own applications, provided they have the appropriate license in the form of a Pro subscription at $200 per month. The use of DeepSeekMath models is subject to the Model License. Why this matters: language models are a broadly disseminated and understood technology. Papers like this show how language models are a class of AI system that is very well understood at this point; there are now numerous teams in countries around the world who have shown themselves able to do end-to-end development of a non-trivial system, from dataset gathering through to architecture design and subsequent human calibration. These points are a distance of 6 apart. But the stakes for Chinese developers are even higher. In fact, the emergence of such efficient models could even expand the market and ultimately increase demand for Nvidia's advanced processors. Are there concerns regarding DeepSeek's AI models? DeepSeek-R1-Distill models are fine-tuned from open-source models, using samples generated by DeepSeek-R1.


The scale of the data exfiltration raised red flags, prompting concerns about unauthorized access and potential misuse of OpenAI's proprietary AI models. All of which has raised a critical question: despite American sanctions on Beijing's ability to access advanced semiconductors, is China catching up with the U.S.? Despite these issues, existing users continued to have access to the service. The past few days have served as a stark reminder of the volatile nature of the AI industry. Up to this point, High-Flyer had produced returns 20%-50% above stock-market benchmarks over the past few years. DeepSeek currently operates as an independent AI research lab under the umbrella of High-Flyer and is focused solely on research, with no detailed plans for commercialization. How has DeepSeek affected global AI development? Additionally, there are fears that the AI system could be used for foreign influence operations, spreading disinformation, surveillance, and the development of cyberweapons for the Chinese government. Experts point out that while DeepSeek's cost-efficient model is impressive, it doesn't negate the crucial role Nvidia's hardware plays in AI development. MLA guarantees efficient inference by significantly compressing the Key-Value (KV) cache into a latent vector, while DeepSeekMoE enables training strong models at an economical cost through sparse computation.
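
To make the KV-cache compression concrete: instead of caching full per-head keys and values for every token, MLA caches a small latent vector and reconstructs keys and values from it with up-projection matrices. The NumPy sketch below shows only that low-rank idea with hypothetical toy sizes and made-up weight names; it omits the decoupled RoPE keys and the matrix-absorption tricks described in the DeepSeek-V2 paper.

```python
import numpy as np

rng = np.random.default_rng(0)
d_model, n_heads, d_head, d_latent = 64, 4, 16, 8   # hypothetical toy sizes

# Hypothetical projection weights (random here; learned in a real model)
W_down = rng.standard_normal((d_latent, d_model)) / np.sqrt(d_model)            # hidden -> latent
W_up_k = rng.standard_normal((n_heads * d_head, d_latent)) / np.sqrt(d_latent)  # latent -> keys
W_up_v = rng.standard_normal((n_heads * d_head, d_latent)) / np.sqrt(d_latent)  # latent -> values

latent_cache = []   # only the small latent vector is stored per token

def append_token(h):
    """Compress one token's hidden state and cache the latent."""
    latent_cache.append(W_down @ h)

def keys_and_values():
    """Rebuild per-head keys and values for every cached token from the latents."""
    C = np.stack(latent_cache)                               # (seq_len, d_latent)
    K = (C @ W_up_k.T).reshape(len(C), n_heads, d_head)
    V = (C @ W_up_v.T).reshape(len(C), n_heads, d_head)
    return K, V

for _ in range(10):
    append_token(rng.standard_normal(d_model))

K, V = keys_and_values()
standard_floats_per_token = 2 * n_heads * d_head   # full K and V per token: 128
latent_floats_per_token = d_latent                 # cached latent per token: 8
print(K.shape, V.shape)
print(standard_floats_per_token / latent_floats_per_token)  # 16.0
```

With these toy sizes the cache holds 8 floats per token instead of 128, a 16x reduction; the real ratio depends on the model's actual dimensions.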

DeepSeek-V2 adopts innovative architectures, including Multi-head Latent Attention (MLA) and DeepSeekMoE. Applications: diverse, including graphic design, education, creative arts, and conceptual visualization. For those not terminally on Twitter, a lot of people who are massively pro AI progress and anti-AI regulation fly under the flag of 'e/acc' (short for 'effective accelerationism'). He'd let the car publicize his location, and so there were people on the street looking at him as he drove by. So a lot of open-source work is things you can get out quickly that generate interest and get more people looped into contributing, whereas a lot of the labs do work that is perhaps less relevant in the short term but that hopefully turns into a breakthrough later on. You should get the output "Ollama is running". This arrangement enables the physical sharing of parameters and gradients of the shared embedding and output head between the MTP module and the main model. The potential data breach raises serious questions about the security and integrity of AI data-sharing practices. While this approach could change at any moment, DeepSeek has essentially put a powerful AI model in the hands of anyone, a potential threat to national security and elsewhere.
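
The "Ollama is running" text is what a local Ollama server returns when you request its root URL (by default `http://localhost:11434`, for example with `curl http://localhost:11434`). Below is a small standard-library Python check; the function name and timeout are hypothetical, and any connection or protocol error is simply treated as "not running".

```python
import http.client
import urllib.error
import urllib.request

def ollama_is_running(base_url="http://localhost:11434", timeout=2.0):
    """Return True if the server at base_url answers with the 'Ollama is running' banner."""
    try:
        with urllib.request.urlopen(base_url, timeout=timeout) as resp:
            body = resp.read().decode("utf-8", errors="replace")
    except (urllib.error.URLError, http.client.HTTPException, OSError):
        return False   # nothing listening, refused connection, timeout, bad response, etc.
    return "Ollama is running" in body

if __name__ == "__main__":
    print(ollama_is_running())
```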

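Physical sharing here means the main model and the multi-token prediction (MTP) module hold references to the same embedding and output-head tensors, so there is one copy in memory and gradients from both paths accumulate into one buffer. The toy NumPy sketch below illustrates that idea; the class names, sizes, and the all-ones upstream gradient are hypothetical, and the transformer layers are omitted entirely.

```python
import numpy as np

class SharedParams:
    """One physical copy of the embedding, the output head, and the head's gradient buffer."""
    def __init__(self, vocab, d, rng):
        self.embedding = rng.standard_normal((vocab, d)) * 0.02
        self.output_head = rng.standard_normal((d, vocab)) * 0.02
        self.output_head_grad = np.zeros_like(self.output_head)

class Predictor:
    """Stands in for either the main model or an MTP module (transformer layers omitted)."""
    def __init__(self, shared):
        self.shared = shared                              # a reference, not a copy

    def forward(self, token):
        hidden = self.shared.embedding[token]             # shared embedding lookup
        return hidden, hidden @ self.shared.output_head   # shared output head -> logits

    def backward_head(self, hidden, dlogits):
        # Gradient of (hidden @ W) w.r.t. W is outer(hidden, dlogits);
        # both modules add into the same buffer.
        self.shared.output_head_grad += np.outer(hidden, dlogits)

rng = np.random.default_rng(0)
shared = SharedParams(vocab=32, d=8, rng=rng)
main_model, mtp_module = Predictor(shared), Predictor(shared)

h_main, logits_main = main_model.forward(3)
h_mtp, logits_mtp = mtp_module.forward(7)
upstream = np.ones(32)                                    # placeholder for dLoss/dlogits
main_model.backward_head(h_main, upstream)
mtp_module.backward_head(h_mtp, upstream)

print(main_model.shared.output_head is mtp_module.shared.output_head)  # True
```
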