The Holistic Approach to DeepSeek


Posted by Clair, 25-02-01 00:50

Jack Clark's Import AI publishes first on Substack. DeepSeek makes the best coding model in its class and releases it as open source:… To test our understanding, we'll perform a few simple coding tasks, compare the various methods of achieving the desired results, and also show the shortcomings. The deepseek-coder model has been upgraded to DeepSeek-Coder-V2-0614, significantly enhancing its coding capabilities. DeepSeek-R1-Zero demonstrates capabilities such as self-verification, reflection, and generating long CoTs, marking a significant milestone for the research community. We will explore more comprehensive and multi-dimensional model evaluation methods to prevent the tendency towards optimizing a fixed set of benchmarks during research, which may create a misleading impression of the model's capabilities and affect our foundational assessment. Read more: A Preliminary Report on DisTrO (Nous Research, GitHub). Read more: Diffusion Models Are Real-Time Game Engines (arXiv). Read more: DeepSeek LLM: Scaling Open-Source Language Models with Longtermism (arXiv). Read more: A Brief History of Accelerationism (The Latecomer).

That evening, he checked on the fine-tuning job and read samples from the model. Google has built GameNGen, a system for getting an AI system to learn to play a game and then use that data to train a generative model to generate the game. An extremely hard test: Rebus is challenging because getting correct answers requires a combination of multi-step visual reasoning, spelling correction, world knowledge, grounded image recognition, understanding human intent, and the ability to generate and test multiple hypotheses to arrive at a correct answer. "Unlike a typical RL setup which attempts to maximize game score, our goal is to generate training data which resembles human play, or at least contains enough diverse examples, in a variety of scenarios, to maximize training data efficiency." What they did: They initialize their setup by randomly sampling from a pool of protein sequence candidates and selecting a pair that has high fitness and low edit distance, then encourage LLMs to generate a new candidate through either mutation or crossover.
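The parent-selection step in the protein example can be sketched roughly as follows. This is only an illustration under stated assumptions, not the authors' method: the names `select_parents`, `sample_size`, and `distance_weight` are hypothetical, the fitness function is supplied by the caller, and combining fitness and edit distance into a single additive score is an assumption made here for simplicity. The LLM mutation or crossover step is not shown.

```python
import itertools
import random


def edit_distance(a: str, b: str) -> int:
    """Levenshtein distance, computed with a rolling one-row dynamic program."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            curr.append(min(prev[j] + 1,          # delete ca
                            curr[j - 1] + 1,      # insert cb
                            prev[j - 1] + cost))  # substitute or match
        prev = curr
    return prev[-1]


def select_parents(pool, fitness, sample_size=4, distance_weight=1.0, rng=None):
    """Randomly sample candidates, then return the pair with the best score.

    Assumption: score = fitness(a) + fitness(b) - distance_weight * distance,
    so fitter and more similar pairs rank higher. The pool must hold at
    least two sequences.
    """
    rng = rng or random.Random()
    sample = rng.sample(pool, min(sample_size, len(pool)))

    def score(pair):
        a, b = pair
        return fitness(a) + fitness(b) - distance_weight * edit_distance(a, b)

    return max(itertools.combinations(sample, 2), key=score)


if __name__ == "__main__":
    # Toy fitness (hypothetical): count of 'A' residues in the sequence.
    pool = ["AAAA", "AAAT", "GGGG", "CCCC"]
    parents = select_parents(pool, fitness=lambda s: s.count("A"))
    print(parents)
```

The selected pair would then be placed into a prompt asking the model to propose a mutated or crossed-over sequence; how that prompt is worded is outside what the text above describes.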

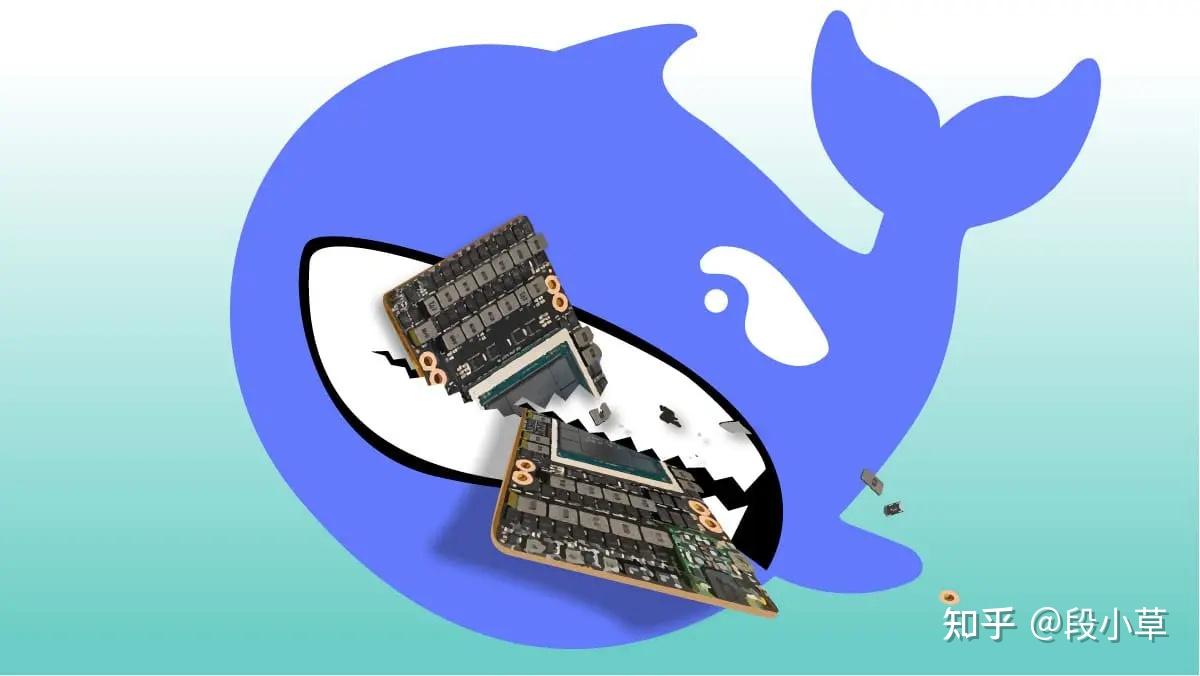

This should be appealing to any developers working in enterprises that have data privacy and sharing concerns, but still want to improve their developer productivity with locally running models. 4. SFT DeepSeek-V3-Base on the 800K synthetic data for 2 epochs. DeepSeek-R1-Zero & DeepSeek-R1 are trained based on DeepSeek-V3-Base. DeepSeek-R1. Released in January 2025, this model is based on DeepSeek-V3 and is focused on advanced reasoning tasks, directly competing with OpenAI's o1 model in performance while maintaining a significantly lower cost structure. "Smaller GPUs present many promising hardware characteristics: they have much lower cost for fabrication and packaging, higher bandwidth

