Why Most DeepSeek Projects Fail

Posted by Hassie on 25-01-31 15:03

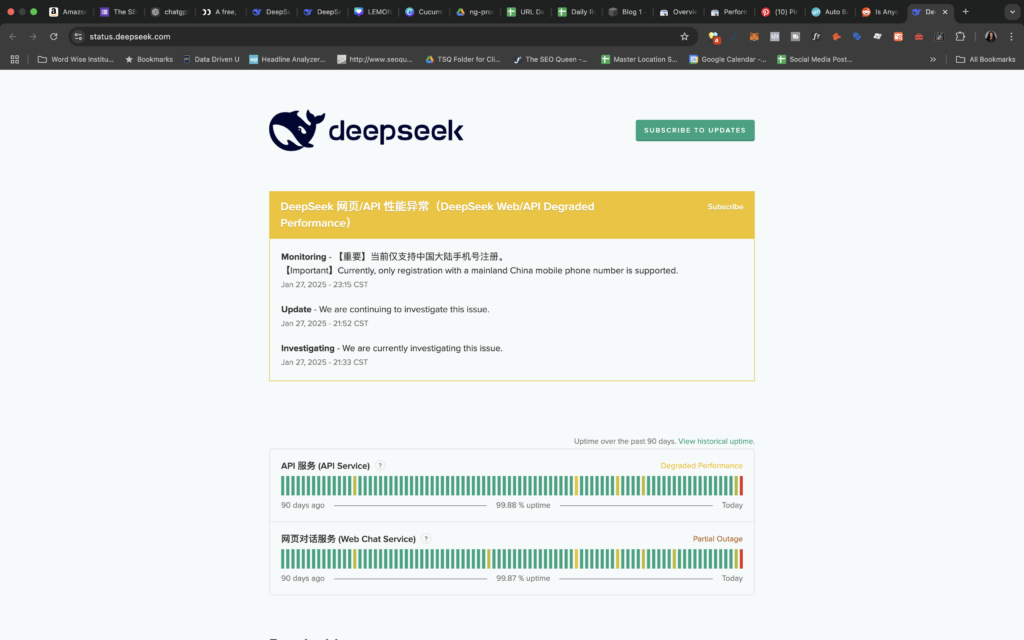

You need to sign up for a free account on the DeepSeek website to use it; however, the company has temporarily paused new sign-ups in response to "large-scale malicious attacks on DeepSeek's services." Existing users can log in and use the platform as usual, but there is no word yet on when new users will be able to try DeepSeek for themselves. To get started with it, compile and install it. As DeepSeek tells it, efficiency breakthroughs have enabled it to maintain extreme cost competitiveness. In the company's own words: "At an economical cost of only 2.664M H800 GPU hours, we complete the pre-training of DeepSeek-V3 on 14.8T tokens, producing the currently strongest open-source base model." The model is designed for real-world AI applications, balancing speed, cost, and performance. DeepSeek-Coder-V2 is an open-source Mixture-of-Experts (MoE) code language model that achieves performance comparable to GPT-4 Turbo on code-specific tasks. If DeepSeek has a business model, it is not clear what that model is, exactly. Then there is the matter of creating the Meta Developer and business accounts, with all the team roles and other mumbo-jumbo. Meta's Fundamental AI Research team has recently published an AI model called Meta Chameleon. Chameleon is flexible: it accepts a mix of text and images as input and produces a corresponding mix of text and images.
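
DeepSeek's quoted pre-training budget can be sanity-checked with simple arithmetic. The sketch below uses only the two figures quoted in this post (2.664M H800 GPU hours and 14.8T tokens) to derive throughput per GPU hour and GPU hours per trillion tokens. The $2 per GPU hour rental price is an assumption made purely for illustration, not a figure from this post, and the names are hypothetical.

```python
# Figures quoted by DeepSeek for DeepSeek-V3 pre-training
PRETRAIN_GPU_HOURS = 2.664e6  # H800 GPU hours
PRETRAIN_TOKENS = 14.8e12     # training tokens

# Assumption for illustration only: a flat rental price per H800 GPU hour
ASSUMED_USD_PER_GPU_HOUR = 2.0


def tokens_per_gpu_hour(tokens: float, gpu_hours: float) -> float:
    """Average number of training tokens processed per GPU hour."""
    return tokens / gpu_hours


def gpu_hours_per_trillion_tokens(tokens: float, gpu_hours: float) -> float:
    """GPU hours spent on each trillion (1e12) training tokens."""
    return gpu_hours / (tokens / 1e12)


def rental_cost_usd(gpu_hours: float, usd_per_hour: float) -> float:
    """Cost if every GPU hour were rented at one flat (assumed) price."""
    return gpu_hours * usd_per_hour


if __name__ == "__main__":
    tph = tokens_per_gpu_hour(PRETRAIN_TOKENS, PRETRAIN_GPU_HOURS)
    hpt = gpu_hours_per_trillion_tokens(PRETRAIN_TOKENS, PRETRAIN_GPU_HOURS)
    cost = rental_cost_usd(PRETRAIN_GPU_HOURS, ASSUMED_USD_PER_GPU_HOUR)
    print(f"~{tph / 1e6:.2f}M tokens per GPU hour")
    print(f"~{hpt / 1e3:.0f}K GPU hours per trillion tokens")
    print(f"~${cost / 1e6:.3f}M at the assumed price")
```

Run as a script, it prints roughly 5.56M tokens per GPU hour, 180K GPU hours per trillion tokens, and about $5.328M at the assumed price. The dollar figure scales linearly with whatever hourly price you actually assume.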

DeepSeek-Prover-V1.5 aims to address this by combining two powerful techniques: reinforcement learning and Monte-Carlo Tree Search. Monte-Carlo Tree Search is a way of exploring possible sequences of actions (in this case, logical steps) by simulating many random "play-outs" and using the results to guide the search toward more promising paths. Reinforcement Learning: the system uses reinforcement learning to learn how to navigate the search space of possible logical steps. Reinforcement learning is a type of machine learning in which an agent learns by interacting with an environment and receiving feedback on its actions.

Integrate user feedback to refine the generated test data scripts. Ensure the generated SQL scripts are functional and adhere to the DDL and data constraints. The first model, @hf/thebloke/deepseek-coder-6.7b-base-awq, generates natural language steps for data insertion.

The first problem is about analytic geometry. Specifically, we paired a policy model, designed to generate problem solutions in the form of computer code, with a reward model, which scored the outputs of the policy model.

3. Prompting the Models: the first model receives a prompt explaining the desired outcome and the provided schema.
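
To make the Monte-Carlo Tree Search description concrete, here is a minimal UCT-style sketch. It is a generic textbook MCTS, not the search procedure DeepSeek-Prover-V1.5 actually uses, and the toy problem (choose three steps of +1, +2 or +3 so the running total lands exactly on 9) is a hypothetical stand-in for a sequence of logical steps. Each iteration selects a path with the UCB1 rule, expands one new node, runs a random play-out to the end, and backs the result up the tree.

```python
import math
import random
from dataclasses import dataclass, field
from typing import Dict, List, Optional

ACTIONS = (1, 2, 3)  # hypothetical "steps": add 1, 2 or 3 to a running total
DEPTH = 3            # every episode takes exactly DEPTH steps
TARGET = 9           # reward 1.0 only if the final total equals TARGET


@dataclass
class Node:
    total: int
    depth: int
    parent: Optional["Node"] = None
    action: Optional[int] = None
    children: Dict[int, "Node"] = field(default_factory=dict)
    visits: int = 0
    value: float = 0.0  # sum of play-out rewards backed up through this node

    def is_terminal(self) -> bool:
        return self.depth == DEPTH

    def untried_actions(self) -> List[int]:
        return [a for a in ACTIONS if a not in self.children]


def reward(total: int) -> float:
    return 1.0 if total == TARGET else 0.0


def select_child(node: Node, c: float = 1.4) -> Node:
    # UCB1: mean reward plus an exploration bonus that favors rarely visited children
    return max(
        node.children.values(),
        key=lambda ch: ch.value / ch.visits
        + c * math.sqrt(math.log(node.visits) / ch.visits),
    )


def random_playout(total: int, depth: int, rng: random.Random) -> float:
    # Finish the episode with uniformly random steps and score the outcome
    while depth < DEPTH:
        total += rng.choice(ACTIONS)
        depth += 1
    return reward(total)


def mcts(iterations: int = 2000, seed: int = 0) -> Node:
    rng = random.Random(seed)
    root = Node(total=0, depth=0)
    for _ in range(iterations):
        node = root
        # 1. Selection: descend through fully expanded nodes using UCB1
        while not node.is_terminal() and not node.untried_actions():
            node = select_child(node)
        # 2. Expansion: add one untried child, unless the episode is over
        if not node.is_terminal():
            action = rng.choice(node.untried_actions())
            child = Node(node.total + action, node.depth + 1, parent=node, action=action)
            node.children[action] = child
            node = child
        # 3. Simulation: random play-out from the new node
        result = random_playout(node.total, node.depth, rng)
        # 4. Backpropagation: update every node on the path back to the root
        while node is not None:
            node.visits += 1
            node.value += result
            node = node.parent
    return root


def best_first_step(root: Node) -> int:
    # Recommend the most visited child, the usual robust choice after MCTS
    return max(root.children.values(), key=lambda ch: ch.visits).action
```

Because only the path 3, 3, 3 earns a reward, `best_first_step(mcts())` converges to 3. In a theorem-proving setting the reward would plausibly come from checking whether a proof attempt succeeds, and a learned policy (the reinforcement learning part) would replace the uniformly random play-outs.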

I pull the DeepSeek Coder model and use the Ollama API service to send a prompt and get the generated response. Get started with CopilotKit using the following command. Make sure you are using llama.cpp from commit d0cee0d or later. For extended sequ… effectively. Reasoning models take a little longer, usually seconds to minutes longer, to arrive at solutions compared to a typical non-reasoning model.
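
The Ollama workflow described above can be sketched with only the Python standard library. This assumes an Ollama server running at its default local address and a model pulled under the name `deepseek-coder` (both assumptions; adjust to your setup). It posts a non-streaming request to Ollama's `/api/generate` endpoint and reads the `response` field of the reply; the helper function names are hypothetical.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # assumed: Ollama's default local address
MODEL = "deepseek-coder"  # assumed: the model was pulled under this name


def build_generate_request(prompt: str, model: str = MODEL,
                           url: str = OLLAMA_URL) -> urllib.request.Request:
    """Build a non-streaming POST request for the /api/generate endpoint."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def parse_generate_response(body: bytes) -> str:
    """Extract the generated text from a non-streaming /api/generate reply."""
    return json.loads(body)["response"]


def generate(prompt: str, timeout: float = 120.0) -> str:
    """Send the prompt to a running Ollama server and return the completion."""
    req = build_generate_request(prompt)
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return parse_generate_response(resp.read())
```

With the server running, `generate("Write a Python function that reverses a string.")` returns the model's reply as a string. Setting `"stream": False` keeps the sketch simple; with streaming enabled, Ollama instead returns a sequence of JSON objects that would need to be read line by line.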
