Bootstrapping LLMs for Theorem-proving With Synthetic Data

Posted by Lacey on 25-01-31 14:40

American A.I. infrastructure - each called DeepSeek "super impressive". The training run was based on a Nous technique called Distributed Training Over-the-Internet (DisTro, Import AI 384) and Nous has now published further details on this approach, which I'll cover shortly. With High-Flyer as one of its investors, the lab spun off into its own company, also called DeepSeek. The authors also made an instruction-tuned one which does somewhat better on a few evals. There was a kind of ineffable spark creeping into it - for lack of a better word, personality. AI is a confusing subject and there tends to be a ton of double-speak and people generally hiding what they really think. There was a tangible curiosity coming off of it - a tendency towards experimentation. "This run presents a loss curve and convergence rate that meets or exceeds centralized training," Nous writes. "This means we need twice the computing power to achieve the same results." Which means it is used for many of the same tasks, though exactly how well it works compared to its rivals is up for debate. I believe succeeding at Nethack is incredibly hard and requires a very good long-horizon context system as well as an ability to infer quite complex relationships in an undocumented world.

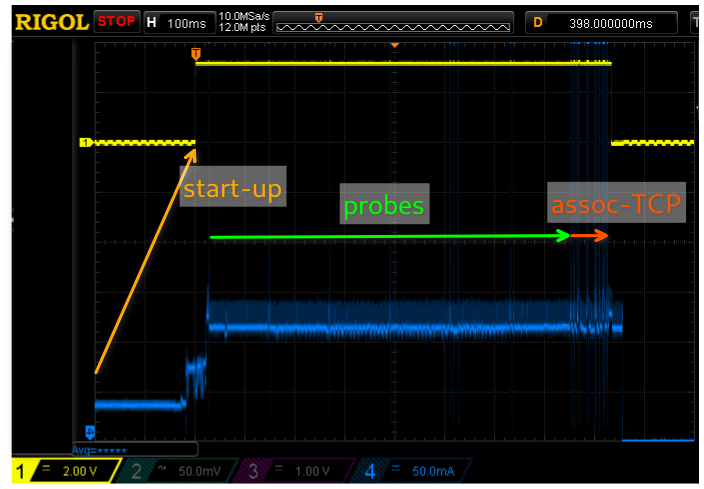

However, to solve complex proofs, these models need to be fine-tuned on curated datasets of formal proof languages. We do not recommend using Code Llama or Code Llama - Python to perform general natural language tasks, since neither of these models is designed to follow natural language instructions. DeepSeek Coder V2: Showcased a generic function for calculating factorials with error handling using traits and higher-order functions. The code included struct definitions, methods for insertion and lookup, and demonstrated recursive logic and error handling. Their product allows programmers to more easily integrate various communication methods into their software and programs. AI startup Nous Research has published a very short preliminary paper on Distributed Training Over-the-Internet (DisTro), a technique that "reduces inter-GPU communication requirements for each training setup without using amortization, enabling low latency, efficient and no-compromise pre-training of large neural networks over consumer-grade internet connections using heterogenous networking hardware". CodeGemma: Implemented a simple turn-based game using a TurnState struct, which included player management, dice roll simulation, and winner detection. Others demonstrated simple but clear examples of advanced Rust usage, like Mistral with its recursive approach or Stable Code with parallel processing. We host the intermediate checkpoints of DeepSeek LLM 7B/67B on AWS S3 (Simple Storage Service).
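The post describes the DeepSeek Coder V2 output (a generic factorial with error handling, traits, and higher-order functions) but does not reproduce it. The following is only a minimal sketch of what such a function might look like, not the model's actual output. The trait `FactorialInt`, the error type `FactorialError`, and the function `factorial` are all hypothetical names introduced here for illustration.

```rust
/// Hypothetical error type: the only failure mode in this sketch is overflow.
#[derive(Debug, PartialEq)]
enum FactorialError {
    Overflow,
}

/// Hypothetical trait listing the operations the generic function needs.
trait FactorialInt: Copy {
    const ONE: Self;
    fn from_u32(n: u32) -> Option<Self>;
    fn mul_checked(self, rhs: Self) -> Option<Self>;
}

impl FactorialInt for u32 {
    const ONE: Self = 1;
    fn from_u32(n: u32) -> Option<Self> {
        Some(n)
    }
    fn mul_checked(self, rhs: Self) -> Option<Self> {
        // Delegates to the standard library's overflow-checked multiply.
        self.checked_mul(rhs)
    }
}

impl FactorialInt for u64 {
    const ONE: Self = 1;
    fn from_u32(n: u32) -> Option<Self> {
        Some(u64::from(n))
    }
    fn mul_checked(self, rhs: Self) -> Option<Self> {
        self.checked_mul(rhs)
    }
}

/// Computes n! in the integer type `T`, returning an error instead of
/// wrapping or panicking when the result does not fit.
fn factorial<T: FactorialInt>(n: u32) -> Result<T, FactorialError> {
    // `try_fold` is the higher-order function here: it stops at the first Err.
    (1..=n).try_fold(T::ONE, |acc, k| {
        T::from_u32(k)
            .and_then(|k| acc.mul_checked(k))
            .ok_or(FactorialError::Overflow)
    })
}
```

Called as `factorial::<u32>(5)`, this yields `Ok(120)`, while `factorial::<u32>(13)` yields `Err(FactorialError::Overflow)` because 13! no longer fits in 32 bits. Using `try_fold` rather than recursion is a design choice made for this sketch; the post does not say which approach the model took.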

Shortly before this issue of Import AI went to press, Nous Research announced that it was in the process of training a 15B parameter [...] designed to comply with US export controls - and spent $5.6m to train R1's foundational model, V3. Released under the Apache 2.0 license, it can be deployed locally or on cloud platforms, and its chat-tuned version competes with 13B models. How good are the models? LLaMa everywhere: The interview also provides an oblique acknowledgement of an open secret - a large chunk of other Chinese AI startups and major companies are just re-skinning Facebook's LLaMa models. Why this matters - compute is the only thing standing between Chinese AI companies and the frontier labs in the West: This interview is the latest example of how access to compute is the only remaining factor that differentiates Chinese labs from Western labs.
